05. Steering Lineage

05.1 | Core Methodology: Influence Mapping

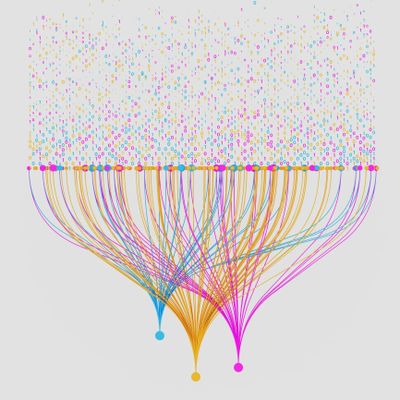

Reinforcement Signal Tracking

This module tracks every manual or automated steering command applied to the model to visualize the evolution of neural behavior. By mapping these reinforcement signals, we provide a clear history of how specific guidance has shaped the current state-space. This ensures that the model’s trajectory remains transparent and that the influence of every 'steering' event is forensically accounted for.
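A minimal sketch of how such a steering history could be recorded, assuming an append-only list of events. The names `SteeringEvent`, `SteeringLineage`, `target`, and `strength` are hypothetical and are not taken from the suite's actual code.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class SteeringEvent:
    """One steering command (hypothetical schema, for illustration only)."""
    step: int        # position in the lineage, starting at 0
    source: str      # "manual" or "automated"
    target: str      # illustrative label for the behavior being steered
    strength: float  # signed magnitude of the reinforcement signal


@dataclass
class SteeringLineage:
    """Append-only history of steering events."""
    events: List[SteeringEvent] = field(default_factory=list)

    def record(self, source: str, target: str, strength: float) -> SteeringEvent:
        if source not in ("manual", "automated"):
            raise ValueError(f"unknown source: {source!r}")
        event = SteeringEvent(len(self.events), source, target, strength)
        self.events.append(event)
        return event

    def cumulative_influence(self) -> Dict[str, float]:
        """Sum the signal strength applied to each target so far."""
        totals: Dict[str, float] = {}
        for event in self.events:
            totals[event.target] = totals.get(event.target, 0.0) + event.strength
        return totals
```

Because each event is frozen and carries its position in the sequence, the list can be replayed in order to show how guidance accumulated over time.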

05.2 | Institutional Alignment: Bias Attribution

Alignment Directionality & Drift Analysis

Vector drift analysis protects institutional values by identifying intentional or accidental bias in model tuning. By analyzing the directionality of steering commands, the module checks that model adjustments remain aligned with core institutional standards. This proactive audit flags bias at the source, before it can develop into maladaptive decision-making paths.
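One way to illustrate a directionality check is cosine similarity between each steering vector and a reference direction. This is a sketch under stated assumptions, not the module's documented method: the reference vector, the `min_similarity` threshold, and the function names are all hypothetical.

```python
import math
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length, non-zero vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def flag_drift(
    steering_vectors: Sequence[Sequence[float]],
    reference: Sequence[float],
    min_similarity: float = 0.5,  # assumed threshold, chosen for illustration
) -> List[int]:
    """Return the indices of steering vectors that point away from the reference."""
    return [
        index
        for index, vector in enumerate(steering_vectors)
        if cosine_similarity(vector, reference) < min_similarity
    ]
```

With a reference of `[1.0, 0.0]`, a steering vector of `[0.0, 1.0]` (orthogonal) or `[-1.0, 0.0]` (opposite) would be flagged, while `[1.0, 1.0]` would pass at the default threshold.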

05.3 | Data Sovereignty: Reversion Protocols

Historical State-Space Restoration

Rooted in my Columbia University research into information sovereignty, this module allows auditors to roll back a model’s steering to a previous 'clean' state. This snapshot recovery protocol ensures that baseline progress is never lost due to corrupted steering paths. By maintaining a library of sovereign model states, we protect the integrity of the institution's proprietary AI assets.
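The snapshot-and-restore idea can be sketched as a small library of labeled states. This assumes the steering state fits in a plain Python dictionary, which is a simplification; `SnapshotLibrary` and its methods are hypothetical names.

```python
import copy
from typing import Any, Dict


class SnapshotLibrary:
    """Stores labeled copies of a steering state for later rollback."""

    def __init__(self) -> None:
        self._snapshots: Dict[str, Dict[str, Any]] = {}

    def save(self, label: str, state: Dict[str, Any]) -> None:
        # Refuse to overwrite so a saved 'clean' state cannot be silently replaced.
        if label in self._snapshots:
            raise KeyError(f"snapshot {label!r} already exists")
        # Deep copy so later changes to the live state do not leak into the snapshot.
        self._snapshots[label] = copy.deepcopy(state)

    def restore(self, label: str) -> Dict[str, Any]:
        # Return a fresh copy so the stored snapshot stays untouched.
        return copy.deepcopy(self._snapshots[label])
```

Copying on both save and restore is the design choice that matters here: the stored baseline stays isolated from whatever happens to the live state afterward.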

05.4 | Forensic Logging: Fine-Tuning Oversight

Parameter Integrity & Repeatability

To ensure a transparent foundation for AI logic, the suite logs every hyperparameter used during fine-tuning sessions. This audit ensures that the model's 'personality' is constructed through repeatable and verifiable methods. Every fine-tuning event is documented as an immutable forensic record, providing full accountability for the model’s evolved behavior.
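A hash-chained log is one common way to make such records tamper-evident. The sketch below is an assumption about how this could work, not the suite's documented implementation; the function names and the example hyperparameter keys are hypothetical. Note that a hash chain detects later edits rather than physically preventing them.

```python
import hashlib
import json
from typing import Any, Dict, List


def _digest(prev_hash: str, hyperparams: Dict[str, Any]) -> str:
    """SHA-256 over the previous hash plus the canonically serialized hyperparameters."""
    payload = json.dumps({"prev": prev_hash, "hyperparams": hyperparams}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_record(log: List[Dict[str, Any]], hyperparams: Dict[str, Any]) -> Dict[str, Any]:
    """Append one fine-tuning record, chained to the previous record's hash."""
    prev_hash = log[-1]["hash"] if log else ""
    record = {
        "prev": prev_hash,
        "hyperparams": dict(hyperparams),  # copy so the caller's dict is not shared
        "hash": _digest(prev_hash, hyperparams),
    }
    log.append(record)
    return record


def verify(log: List[Dict[str, Any]]) -> bool:
    """Recompute every hash in order; any edited record breaks the chain."""
    prev_hash = ""
    for record in log:
        if record["prev"] != prev_hash:
            return False
        if record["hash"] != _digest(prev_hash, record["hyperparams"]):
            return False
        prev_hash = record["hash"]
    return True
```

Re-running `verify` over the stored log shows whether any logged hyperparameter was changed after the fact, since every later hash depends on the records before it.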

05.5 | Technical Documentation: Open Source Verification

Lineage Tracking Source Code

The Python framework for lineage tracking and the historical steering logs are available for independent verification on our GitHub repository. This documentation details the algorithms used to map model influence and ensure the sovereign evolution of the 'Neural DNA'. Transparency in steering is critical for maintaining long-term institutional trust.

By documenting the evolution of model guidance, Steering Lineage ensures full accountability for AI behavior. Once the lineage is mapped, the suite moves to 06. Population Coder to audit distributed neural patterns.
